BayesLoRA: Task-Specific Guardrails for Reliable Agents

At Invoke Labs, we believe the future of AI lies in agents that can not only act autonomously but also recognize when they’re out of their depth. In complex, real-world settings, even minor mistakes can cascade into serious failures. That’s why uncertainty quantification (UQ) is essential: it lets an agent say “I’m not sure” and trigger a safe fallback instead of forging ahead blindly.

The Challenge of Agentic Workflows

Modern agentic systems plan and execute multi-step tasks, from scheduling meetings to guiding robots through a warehouse. While large language models (LLMs) have made these agents astonishingly capable, they can still falter unexpectedly. An agent might misinterpret a query, hallucinate details, or encounter inputs far outside its training distribution. Without a built-in measure of confidence, there’s no reliable way to decide when to pause, ask for clarification, or seek human oversight.

Why General-Purpose Uncertainty Falls Short

Many existing UQ methods attempt to wrap uncertainty around the entire model, often by ensembling multiple large models, running heavyweight Bayesian inference over all the weights, or enabling dropout in every transformer layer. These approaches tend to be too coarse, too computationally expensive for real-time agents, or both. Worse, they react to any shift in the backbone, even one that has nothing to do with the task at hand, so task-irrelevant changes raise false alarms while genuinely novel task inputs can be lost in the noise.

Introducing BayesLoRA

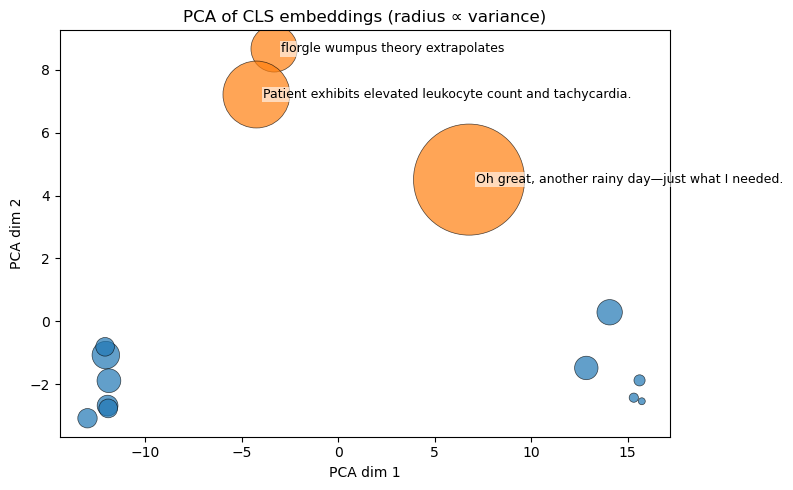

To address this gap, we developed BayesLoRA, a lightweight framework that delivers task-specific uncertainty by applying Monte Carlo (MC) dropout exclusively to the small adapter modules used for fine-tuning. Instead of perturbing the entire model, BayesLoRA targets the low-rank updates (LoRA adapters) that encode task-specific knowledge. The result is a crisp confidence signal: near-zero variance on well-covered inputs, and a clear spike in variance on out-of-distribution or ambiguous examples.
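One way to write this down, assuming the standard LoRA parameterization and dropout applied to the adapter’s input (the placement Hugging Face PEFT uses for its `lora_dropout` option); BayesLoRA’s exact placement may differ, and the symbols below are introduced here for illustration:

```latex
\begin{aligned}
h^{(t)} &= W_0 x + \frac{\alpha}{r}\, B A \left( \frac{m^{(t)} \odot x}{1 - p} \right),
  \qquad m^{(t)}_j \sim \operatorname{Bernoulli}(1 - p), \\
\hat{\mu}(x) &= \frac{1}{T} \sum_{t=1}^{T} f^{(t)}(x), \qquad
\hat{\sigma}^2(x) = \frac{1}{T} \sum_{t=1}^{T} \bigl( f^{(t)}(x) - \hat{\mu}(x) \bigr)^2
\end{aligned}
```

Here W_0 (d × k) is the frozen base weight, B (d × r) and A (r × k) are the adapter matrices of rank r with scaling factor α, p is the dropout rate, and f^(t)(x) is the model output (for example, a class probability) on pass t of T. Only the mask m^(t) changes from pass to pass, so all of the randomness behind the spread in f^(t)(x) originates in the adapters.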

How BayesLoRA Works (at a Glance)

- LoRA Adapters: During fine-tuning, we learn small, low-rank matrices that adapt the frozen backbone to a new task. These adapters typically include dropout for regularization.

- MC-Dropout Inference: At runtime, we keep that dropout active and perform a handful (e.g., 20) of stochastic forward passes, with dropout applied only inside the adapters and the base model weights kept fixed.

- Mean & Variance: We compute the average prediction and its variance across those passes. Low variance means the adapter “knows” the input; high variance signals uncertainty.

- Guardrail Policy: An agent can then compare variance against thresholds—autonomously proceeding when confident, querying for more information when borderline, or escalating to a human when uncertainty is high.
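The first three steps can be sketched on a toy scale. The following is a minimal, dependency-free illustration, not code from the BayesLoRA repository: a single LoRA-adapted linear layer produces a sentiment logit, dropout is applied only to the adapter’s input (an assumption, mirroring PEFT’s `lora_dropout`), and the mean and variance of the predicted probability are collected over T passes. The names `LoRALinear`, `mc_predict`, and `matvec`, and all weights, are hypothetical.

```python
import math
import random
from statistics import fmean, pvariance


def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(m * vj for m, vj in zip(row, v)) for row in M]


class LoRALinear:
    """Hypothetical toy layer: frozen weight W0 plus a LoRA update (alpha / r) * B @ A.

    Shapes: W0 is d x k, A is r x k, B is d x r. Dropout is applied only to the
    adapter's input (an assumption), so the base path W0 @ x is deterministic.
    """

    def __init__(self, W0, A, B, alpha, p, rng):
        self.W0, self.A, self.B = W0, A, B
        self.scale = alpha / len(A)  # r = number of rows of A
        self.p = p                   # adapter dropout probability
        self.rng = rng

    def forward(self, x, mc=True):
        base = matvec(self.W0, x)  # frozen backbone path, never perturbed
        if mc and self.p > 0:
            keep = 1.0 - self.p
            # Inverted dropout: zero each input with prob p, rescale survivors by 1/keep.
            x_in = [xi / keep if self.rng.random() < keep else 0.0 for xi in x]
        else:
            x_in = x  # deterministic pass (dropout off)
        delta = matvec(self.B, matvec(self.A, x_in))
        return [b + self.scale * dl for b, dl in zip(base, delta)]


def mc_predict(layer, x, T=20):
    """Run T stochastic passes; return mean and population variance of P(positive)."""
    probs = []
    for _ in range(T):
        logit = layer.forward(x, mc=True)[0]
        probs.append(1.0 / (1.0 + math.exp(-logit)))  # sigmoid of the logit
    return fmean(probs), pvariance(probs)


if __name__ == "__main__":
    rng = random.Random(0)
    W0 = [[0.5, -0.2, 0.1, 0.3]]                         # d = 1, k = 4
    A = [[0.4, 0.1, -0.3, 0.2], [-0.1, 0.5, 0.2, -0.4]]  # r = 2
    B = [[0.6, -0.7]]
    x = [1.0, 2.0, -1.0, 0.5]
    layer = LoRALinear(W0, A, B, alpha=4.0, p=0.1, rng=rng)
    mean, var = mc_predict(layer, x, T=20)
    print(f"mean P(positive) = {mean:.3f}, variance = {var:.5f}")
```

Because dropout touches only the adapter path, `W0 @ x` is identical on every pass; in this one-layer toy it could be computed once and reused. In a real PyTorch model, the analogous step would be keeping only the adapters’ dropout modules in training mode at inference time; how the BayesLoRA code handles this is not shown here.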

Why Task-Specific Guardrails Matter

By localizing uncertainty to the adapters, BayesLoRA avoids the noise and overhead of backbone-wide methods. The uncertainty it measures is directly tied to the fine-tuned task subspace—so it doesn’t cry wolf on irrelevant distributional shifts. In practice, this sharp focus enables agents to escalate precisely when their newly learned knowledge is insufficient, rather than on every minor model perturbation.

Real-World Validation

In our prototype experiments on sentiment analysis, BayesLoRA delivered near-zero variance on in-domain movie reviews, while clearly flagging:

- Ambiguous sentences (“I’ve seen better films”)

- Domain-shift inputs from finance (“The quarterly earnings exceeded expectations”)

- Gibberish tokens (“florgle wumpus theory extrapolates”)

These results suggest that even modest-rank adapters (e.g., rank 64) can provide actionable uncertainty signals with minimal compute overhead.

Impact for Invoke Agents

Integrating BayesLoRA into our Invoke agent framework gives our agents built-in introspection:

- Autonomous steps when confidence is high

- Clarification requests when the agent is unsure

- Human escalation before acting in truly uncertain scenarios

This layered approach to decision-making transforms our agents from “silent performers” into collaborative partners that know their limits.
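The three tiers map naturally onto a two-threshold policy. Below is a minimal sketch under stated assumptions: the input is an MC-dropout variance of a predicted probability, and the `Action` enum, the `guardrail` function, and the default thresholds 0.01 and 0.05 are hypothetical placeholders rather than values used in Invoke agents.

```python
from enum import Enum


class Action(Enum):
    PROCEED = "proceed autonomously"
    CLARIFY = "ask for clarification"
    ESCALATE = "escalate to a human"


def guardrail(variance, clarify_at=0.01, escalate_at=0.05):
    """Map an MC-dropout variance to one of three actions.

    clarify_at and escalate_at are hypothetical placeholder thresholds;
    real values would need to be calibrated on held-out data.
    """
    if not 0.0 <= clarify_at < escalate_at:
        raise ValueError("thresholds must satisfy 0 <= clarify_at < escalate_at")
    if variance < clarify_at:
        return Action.PROCEED   # confident: act autonomously
    if variance < escalate_at:
        return Action.CLARIFY   # borderline: ask for more information
    return Action.ESCALATE      # highly uncertain: hand off to a human


if __name__ == "__main__":
    for v in (0.001, 0.02, 0.2):
        print(v, guardrail(v).value)
```

Since a probability lies in [0, 1], its variance can never exceed 0.25, so thresholds on this scale are small numbers; where exactly to place them is a calibration choice that trades autonomy against the rate of clarification requests and handoffs.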

What’s Next

We’re currently rolling out BayesLoRA across several pilot projects at Invoke Labs:

- Knowledge-base assistants that defer when the topic is off-book

- Robotic controllers that pause motion plans under semantic uncertainty

- Customer-support bots that blend autonomous responses with expert handoffs

Over the coming months, we’ll expand BayesLoRA to additional tasks, refine its policy thresholds, and explore hybrid strategies that combine adapter-level uncertainty with lightweight backbone signals.

Read the full pre-print and explore the code on GitHub:

BayesLoRA: Task-Specific Uncertainty in Low-Rank Adapters

https://github.com/mercury0100/bayeslora

The full paper will be submitted for review to ICLR.

By equipping agents with the ability to say “I’m not sure,” BayesLoRA brings us one step closer to AI systems that are both powerful and trustworthy. We’re excited to share this work as part of Invoke Labs’ commitment to safe, reliable, and intelligent automation.